#16: XGBoost 2.0, Decoding speech from brain recordings, LLMs with pause tokens

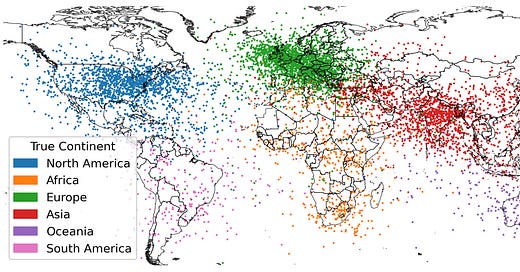

Language Models Represent Space and Time

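This paper finds that LLMs learn linear representations of space and time at multiple scales: a linear probe on the model's internal activations can recover where a place is located and when an event happened.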

The authors also identify individual “space neurons” and “time neurons” that reliably encode spatial and temporal coordinates.
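To make the probing idea concrete, here is a minimal sketch of a linear probe. It assumes synthetic "activations" that linearly encode latitude and longitude plus noise: no real LLM is involved, and the helpers `fit_ridge_probe` and `r2_score` are hypothetical names. The probe is a closed-form ridge regression (one common choice of linear probe) from activations to coordinates, scored by R² on held-out examples.

```python
import numpy as np

# Hypothetical setup: synthetic activations stand in for LLM hidden states.
rng = np.random.default_rng(0)
n_places, d_model = 2000, 256

# Ground-truth (latitude, longitude) for synthetic "places".
coords = np.column_stack([
    rng.uniform(-90, 90, n_places),
    rng.uniform(-180, 180, n_places),
])

# Assumption: activations contain a linear encoding of the coordinates plus noise.
W_true = rng.normal(size=(2, d_model))
acts = coords @ W_true + rng.normal(scale=5.0, size=(n_places, d_model))

def fit_ridge_probe(X, Y, lam=1.0):
    """Closed-form ridge regression with an intercept; returns (weights, bias)."""
    X_mean, Y_mean = X.mean(axis=0), Y.mean(axis=0)
    Xc, Yc = X - X_mean, Y - Y_mean
    d = X.shape[1]
    W = np.linalg.solve(Xc.T @ Xc + lam * np.eye(d), Xc.T @ Yc)
    b = Y_mean - X_mean @ W
    return W, b

def r2_score(y_true, y_pred):
    """Coefficient of determination, computed separately for each output column."""
    ss_res = ((y_true - y_pred) ** 2).sum(axis=0)
    ss_tot = ((y_true - y_true.mean(axis=0)) ** 2).sum(axis=0)
    return 1.0 - ss_res / ss_tot

# Fit on the first 1500 examples, evaluate on the remaining 500.
train, test = slice(0, 1500), slice(1500, None)
W, b = fit_ridge_probe(acts[train], coords[train], lam=10.0)
pred = acts[test] @ W + b
print("held-out R^2 (lat, lon):", r2_score(coords[test], pred))
```

In a real experiment, `acts` would be the hidden states at some layer for each entity name; a high held-out R² shows the coordinates are linearly decodable from that layer.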

XGBoost 2.0

XGBoost 2.0 is here, with new or improved support for:

- Multi-target trees
- Learning-to-rank
- Quantile regression
- The PySpark interface
- External memory

Learn more from the release notes.
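Quantile regression replaces squared error with the pinball (quantile) loss; in XGBoost 2.0 it is selected with the `reg:quantileerror` objective and the `quantile_alpha` parameter. The sketch below does not call XGBoost: it uses plain NumPy on synthetic, skewed data to show why the loss works, namely that the constant minimizing the pinball loss at level alpha is approximately the alpha-quantile of the targets. `pinball_loss` and `best_constants` are hypothetical names.

```python
import numpy as np

def pinball_loss(y, q, alpha):
    """Mean pinball loss of predicting q for targets y at quantile level alpha."""
    diff = y - q
    # Under-predictions cost alpha per unit, over-predictions cost (1 - alpha).
    return np.mean(np.maximum(alpha * diff, (alpha - 1) * diff))

rng = np.random.default_rng(0)
y = rng.exponential(scale=2.0, size=10_000)  # skewed synthetic targets

# Grid-search the best constant prediction for a few quantile levels.
grid = np.linspace(0.0, 10.0, 2001)
best_constants = {}
for alpha in (0.1, 0.5, 0.9):
    losses = [pinball_loss(y, c, alpha) for c in grid]
    best_constants[alpha] = grid[int(np.argmin(losses))]
    print(f"alpha={alpha}: best constant={best_constants[alpha]:.3f}, "
          f"empirical quantile={np.quantile(y, alpha):.3f}")
```

A tree model trained on this loss does the same thing per leaf, so each leaf's output estimates a conditional quantile rather than a conditional mean.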

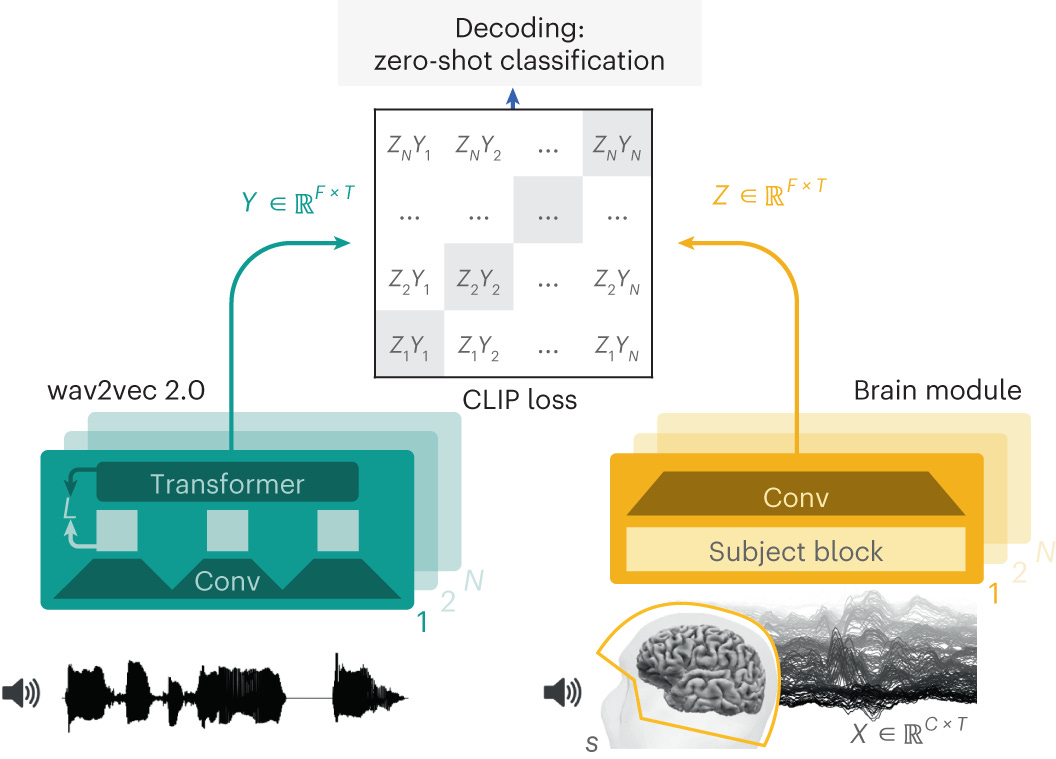

Meta: Decoding speech from brain recordings

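Inspired by CLIP, the model is trained with a contrastive loss between representations of the brain signals (non-invasive MEG or EEG recordings) and representations of candidate audio segments: the segment the participant actually heard should score higher than the other candidates.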
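A CLIP-style objective of this kind can be sketched as follows, assuming the brain and audio encoders have already produced one embedding per segment. Random vectors stand in for those encoder outputs here, and `clip_style_loss` and `retrieve` are hypothetical names. Row i of the brain batch is treated as matching row i of the audio batch, and every other audio segment in the batch acts as a negative candidate. CLIP itself averages the loss over both directions; this sketch keeps only the brain-to-audio direction, which matches the retrieval task of picking the heard segment among candidates.

```python
import numpy as np

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def normalize(x):
    """L2-normalize rows so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def clip_style_loss(brain_emb, audio_emb, temperature=0.1):
    """Brain-to-audio contrastive loss; the matching pair sits on the diagonal.

    The temperature is fixed here for simplicity (CLIP learns it).
    """
    logits = normalize(brain_emb) @ normalize(audio_emb).T / temperature
    idx = np.arange(len(brain_emb))
    # Cross-entropy where the correct "class" for brain row i is audio row i.
    return -log_softmax(logits, axis=1)[idx, idx].mean()

def retrieve(brain_emb, audio_emb):
    """For each brain segment, return the index of the most similar audio segment."""
    return np.argmax(normalize(brain_emb) @ normalize(audio_emb).T, axis=1)

rng = np.random.default_rng(0)
audio = rng.normal(size=(8, 32))
brain_aligned = audio + 0.1 * rng.normal(size=audio.shape)  # well-trained encoder
brain_random = rng.normal(size=audio.shape)                 # untrained encoder
loss_aligned = clip_style_loss(brain_aligned, audio)
loss_random = clip_style_loss(brain_random, audio)
print(loss_aligned, loss_random, retrieve(brain_aligned, audio))
```

Minimizing this loss pulls each brain embedding toward its own audio segment, which is exactly what makes the argmax retrieval in `retrieve` succeed.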

The paper was published in Nature Machine Intelligence, and the code is available here.

Paper: Training Language Models With Pause Tokens

Language models can improve their performance by delaying the generation of the next token in a sequence and allowing the model to manipulate more hidden vectors before producing an output.

This delay is implemented with a learnable “pause token”: one or more copies of it are appended to the input, and during inference the model's outputs are extracted only after the last pause token has been processed.
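The mechanics can be sketched at the token level as follows, assuming a simple list-of-strings token representation. `PAUSE`, `EOS`, `add_pauses`, `build_training_example`, `generate_with_pauses`, and `toy_next_token` are all hypothetical names, and the toy "model" exists only to exercise the decoding loop. During training, pause and prompt tokens are masked out so the model is never trained to predict a pause token; during decoding, the first answer token is the prediction made at the last pause position.

```python
from typing import Callable, List, Tuple

PAUSE = "<pause>"  # hypothetical string for the learnable pause token
EOS = "<eos>"      # hypothetical end-of-sequence token

def add_pauses(prompt: List[str], n_pauses: int) -> List[str]:
    """Append n_pauses copies of the pause token to the prompt."""
    return prompt + [PAUSE] * n_pauses

def build_training_example(
    prompt: List[str], target: List[str], n_pauses: int
) -> Tuple[List[str], List[int]]:
    """Return (tokens, loss_mask); 1 marks tokens the model is trained to predict.

    Prompt and pause tokens get 0, so predicting a pause token is never scored.
    """
    inputs = add_pauses(prompt, n_pauses)
    tokens = inputs + target
    loss_mask = [0] * len(inputs) + [1] * len(target)
    return tokens, loss_mask

def generate_with_pauses(
    next_token: Callable[[List[str]], str],
    prompt: List[str],
    n_pauses: int,
    max_new_tokens: int,
) -> List[str]:
    """Greedy decoding with pause tokens appended to the prompt.

    next_token(context) returns the prediction at the last position of context,
    so predictions at earlier prompt or pause positions are never read.
    """
    context = add_pauses(prompt, n_pauses)
    answer: List[str] = []
    for _ in range(max_new_tokens):
        tok = next_token(context)
        if tok == EOS:
            break
        answer.append(tok)
        context = context + [tok]
    return answer

def toy_next_token(context: List[str]) -> str:
    """Hypothetical stand-in for a language model, only to exercise the loop."""
    if context[-1] == PAUSE:
        return str(context.count(PAUSE))  # toy output: number of pauses seen
    return EOS

tokens, loss_mask = build_training_example(["2", "+", "2", "="], ["4"], n_pauses=2)
answer = generate_with_pauses(toy_next_token, ["2", "+", "2", "="], n_pauses=3, max_new_tokens=5)
print(tokens, loss_mask, answer)
```

With a real transformer, the prompt and all pause tokens are processed in one forward pass; the extra pause positions simply give every layer more hidden vectors to work with before the first answer token is read off.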

Read more about it in the paper.