A Hackers’ Guide to Language Models

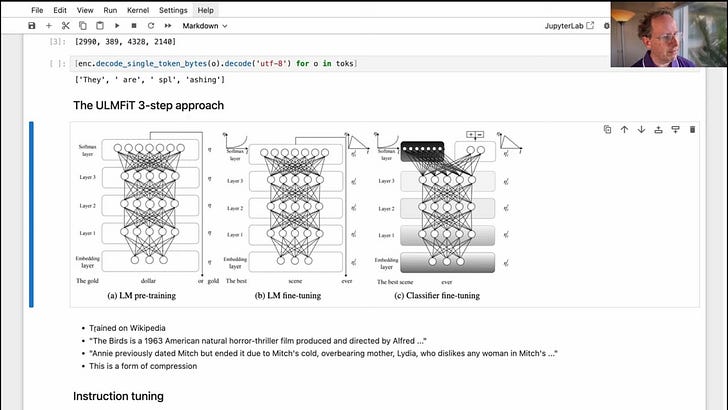

Jeremy Howard presents a comprehensive video tour of the LLM landscape, covering foundational concepts, architecture, practical applications, and hands-on tips for using the OpenAI API.

The video delves into advanced topics such as model testing and optimization with tools like GPTQ and Hugging Face Transformers, explores specialized fine-tuning datasets such as Orca and Platypus, and discusses current trends such as Retrieval Augmented Generation.

Prompt Engineering for 100K context model

A blog post that shares prompting techniques to improve Claude’s recall over long contexts.

The techniques that helped are:

Extracting reference quotes relevant to the question before answering

Supplementing the prompt with examples of correctly answered questions about other sections of the document

The blog can be accessed here.
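
The two techniques above can be sketched as a prompt template. This is a minimal illustration, not the exact prompt from the blog: the function name `build_long_context_prompt`, the `<document>`, `<examples>` and `<quotes>` tag names, and the instruction wording are all assumptions made for this example.

```python
def build_long_context_prompt(document, question, examples=()):
    """Assemble a long-context prompt (hypothetical helper, for illustration only).

    document: the long text the model should answer from
    question: the question to ask about the document
    examples: (question, answer) pairs about *other* sections of the document
    """
    parts = [f"<document>\n{document}\n</document>"]

    # Technique 2: show correctly answered questions about other sections.
    if examples:
        shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
        parts.append(f"<examples>\n{shots}\n</examples>")

    # Technique 1: ask for relevant reference quotes before the answer.
    parts.append(
        "First, copy the quotes from the document that are most relevant "
        "to the question inside <quotes> tags. Then answer the question "
        "using only those quotes."
    )
    parts.append(f"Question: {question}")
    return "\n\n".join(parts)


prompt = build_long_context_prompt(
    document="(long document text goes here)",
    question="What does section 3 conclude?",
    examples=[("What is section 1 about?", "It introduces the topic.")],
)
print(prompt)
```

The resulting string would be sent as the user message to whichever model API is in use; the tag names only need to be consistent within the prompt.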

What makes LLM Tokenizers different from each other?

In the video, Jay shows why tokenizers are crucial components of Large Language Models (LLMs) by comparing how different tokenizers handle a complex text.

Jay's analysis passes the text through various trained tokenizers to highlight their strengths and weaknesses in encoding diverse content, shedding light on the design choices behind different tokenizer implementations.
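
To get a feel for how tokenization choices change the encoding of the same text, here is a toy comparison in plain Python. It does not use the trained tokenizers from the video: the four schemes below (whitespace, word-and-punctuation, character, and UTF-8 byte splitting) and the sample text are stand-ins chosen for this sketch.

```python
import re

# Sample text mixing capitalization, punctuation, digits, and an emoji.
text = "Hello WORLD! 2+2=4 \U0001F642"


def whitespace_tokens(s):
    # Split on runs of whitespace; punctuation stays glued to words.
    return s.split()


def word_punct_tokens(s):
    # Runs of word characters, or single non-space symbols.
    return re.findall(r"\w+|[^\w\s]", s)


def char_tokens(s):
    # One token per Unicode code point.
    return list(s)


def byte_tokens(s):
    # One token per UTF-8 byte; the emoji expands to several bytes.
    return list(s.encode("utf-8"))


for name, fn in [
    ("whitespace", whitespace_tokens),
    ("word+punct", word_punct_tokens),
    ("character", char_tokens),
    ("utf-8 bytes", byte_tokens),
]:
    tokens = fn(text)
    print(f"{name:12} {len(tokens):3} tokens: {tokens}")
```

Coarser schemes give shorter sequences but need a larger vocabulary and struggle with unseen words, while byte-level schemes can encode anything at the cost of longer sequences. Trained subword tokenizers sit between these extremes.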

PyCon India

PyCon India is happening this upcoming weekend in Hyderabad and covers a lot of interesting AI topics.

Today is the last date to register for the event. Check out the website here.